Privacy, Cost, and Control: Why We Moved Away from Third-Party AI Services

During my time working on a social media platform for kids, I was tasked with working on a service to moderate text messages. I briefly remember from one of our product meetings we discussed that the chat should have at least these functionalities for kids: auto-correction (just like Grammarly) and toxicity detection to make the platform safe for children to use.

The goal was absolutely clear: make the chat system resonate with the product’s mission—safe for children—and add value to their learning. And like every other startup in the game, we also wanted to ride the AI gold wagon.

The Initial AI Approach

We worked on functionality where we sent user text in a throttled mode to an AI API that would tell us about grammatical errors in sentences and give us a toxicity rating for the text. At first, it seemed like a great deal: with a single request, we could get solutions to multiple problems with very minimal infrastructure setup. But soon, that excitement started to fade away.

The Reality Check

We soon realized that sending user chats to third-party companies like OpenAI or Claude was not a good idea for obvious privacy concerns—and definitely not appropriate for a child-safe platform. Now you may ask: “This can be easily solved by self-hosting your own open-source LLM.” But wait for it.

Currently, it was hard for us to monitor AI responses effectively. There was no framework present to know whether the responses provided by the LLM were always accurate, what the error rate was, or—as some experts have coined—what the hallucination rate was. For which test inputs did the AI not work as it should?

Additionally, the cost of tokens was far more than we had imagined. This meant that for every chat happening on the platform, we could potentially lose money. So while using AI seemed to be a good choice at first, it definitely was not when we considered all factors.

Important Clarification

I’m not saying that using AI is bad or that you should avoid it entirely. What I’m trying to convey is that jumping to AI solutions without proper thought and design can be catastrophic. At first, it may look like the best approach for a given problem, but it often isn’t when you account for the various other factors that constitute a complete solution.

Our Solution

After around a day of intensive discussion, we agreed to use open-source models for grammar correction and toxicity detection. We decided to create a TensorFlow FastAPI wrapper, dockerize it inside a container, and run it on our EC2 instances.

By self-hosting these machine learning models, we solved several critical issues:

Privacy Concerns Resolved: The data never left our infrastructure, ensuring complete privacy protection for our young users.

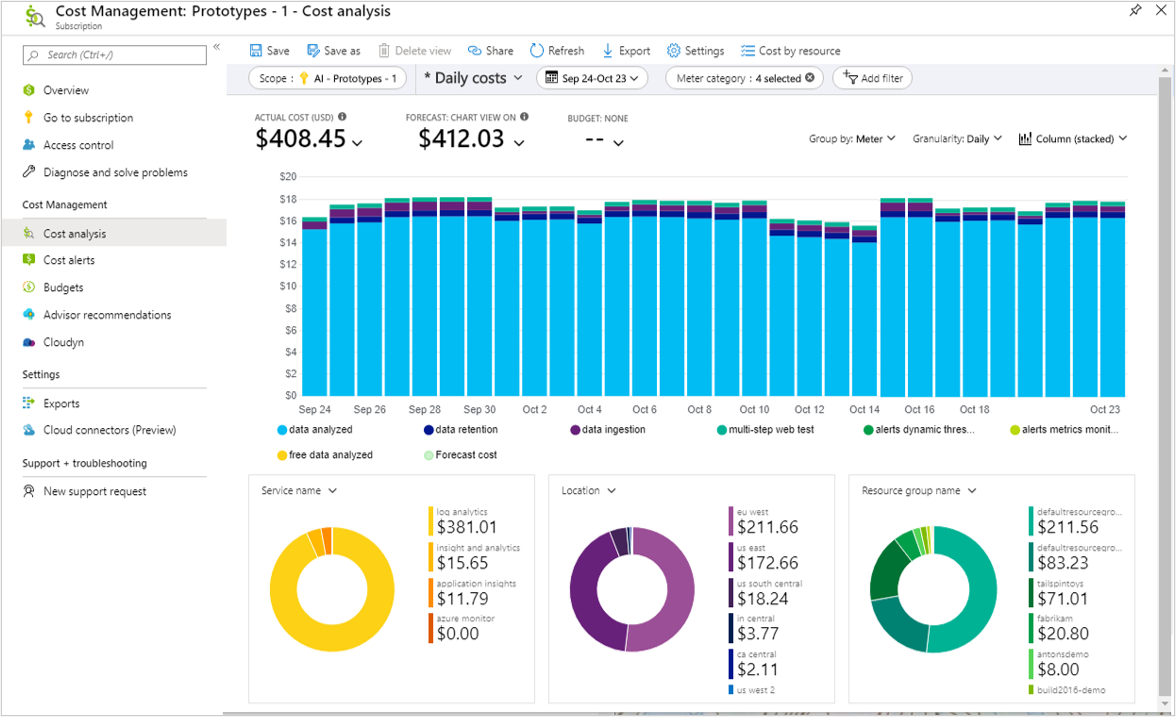

Cost Efficiency: There were no token costs per API transaction. We were only paying for the instance setup, which was significantly less expensive compared to API subscription costs.

Complete Control: We had full control of the service and comprehensive knowledge about API throughput and latency. We could monitor performance, adjust parameters, and optimize for our specific use case.

Reliability: We could guarantee uptime and performance without depending on external services that might experience outages or rate limiting.

Key Takeaways

This solution proved to be fast, inexpensive, and gave us the control and transparency that we lacked when using third-party AI services. The experience taught us valuable lessons about evaluating technology decisions holistically, considering not just the immediate technical benefits but also long-term implications for privacy, cost, control, and reliability.

Sometimes the most sophisticated solution isn’t the best solution—especially for startups where every decision can significantly impact both user trust and financial sustainability.